As with any project, it is important to be able to monitor the impacts of your work. Within citizen science, however, this is not always easy to do. The MICS project has therefore developed an online tool to help citizen science projects measure their impacts, with a particular focus on the areas of society, governance, economy, environment, and science and technology. It also provides resources to help new and ongoing citizen science projects increase the impact of their work.

We have invited Sasha Woods to shed more light on the MICS project and Platform. Sasha works as a Researcher for Impact and Innovation at Earthwatch, and was involved in developing the impact assessment methods, as well as dissemination and outreach for the MICS project.

Thank you for joining us, Sasha.

So my first question is, why is it so difficult to measure and evaluate the impact of the work being done in citizen science?

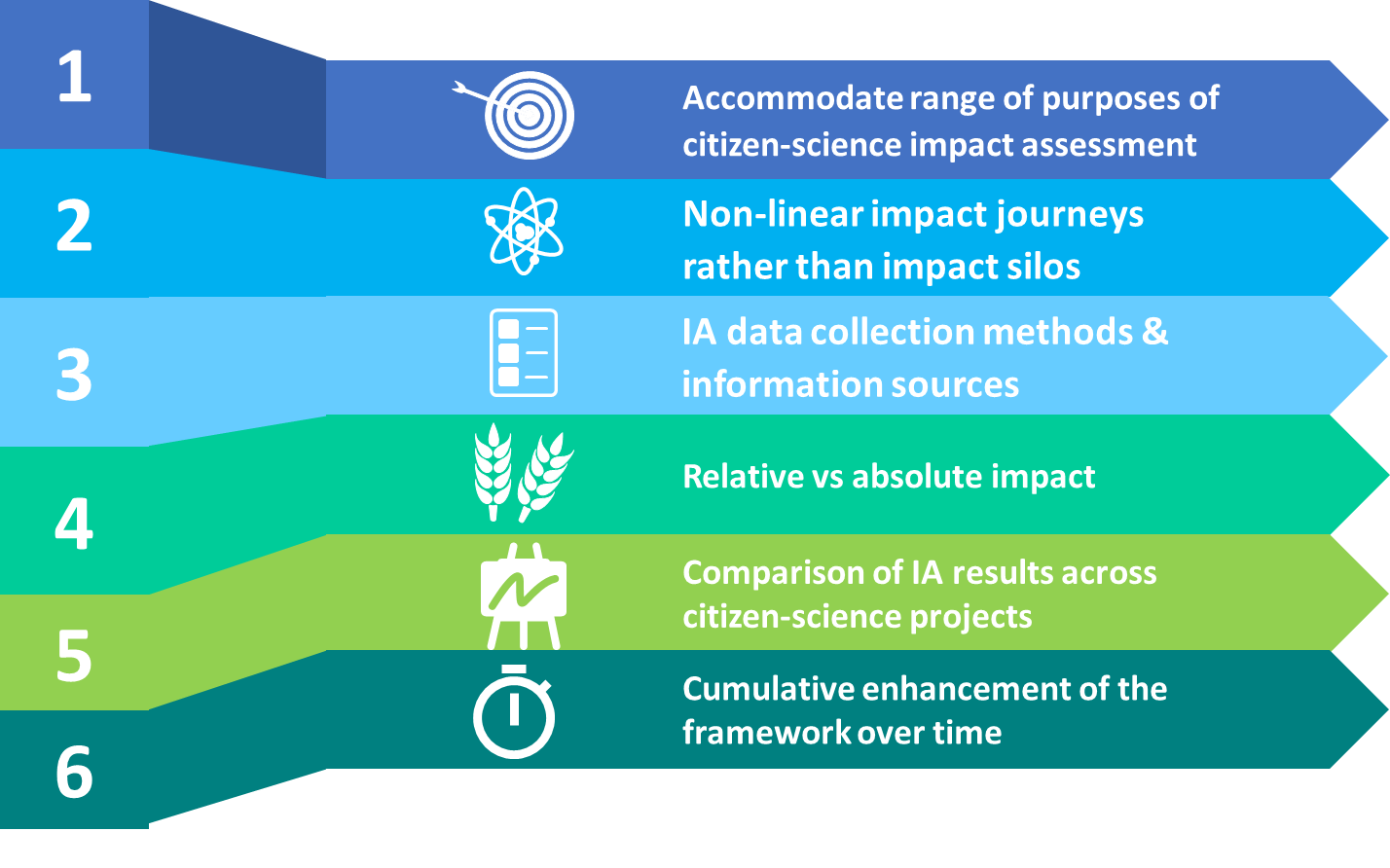

Firstly, it is difficult to define citizen science. We have ECSA’s 10 principles of citizen science, and the characteristics of citizen science, but there isn’t a single definition. And the same is true of impact; how do you define impact? Is impact different from outputs and outcomes; is it short-term or long-term; is it on the environment or on society?

In MICS, we consider impact to cover both the expected, concrete outcomes of an initiative (such as improved water quality in a water quality assessment project) and other, less tangible changes (for example, more pro-environmental attitudes among the participating citizen scientists).

Can you explain a little about how the MICS tool was developed, and how partners contributed to this co-development process?

The MICS tools were developed in two main ways. Firstly, a review of the literature on impact assessment (in citizen science and more generally) was used to build an initial framework. A more targeted literature review, led by IHE Delft, then brought this framework up to date with the state of the art. The result was the MICS Online Guidance, which provides insights into the various impacts of citizen science and why it is important to measure them, how citizen-science initiatives can be co-designed for impact, and how to measure their impacts. The guidance also points users to available resources, such as tools, scientific papers, training materials, and networks, that can help improve impact assessment.

The guidance was validated through our case studies, which brought together community groups and local authorities to co-design new citizen-science initiatives around nature-based solutions. In Italy, led by AAWA, schoolchildren and other locals monitored the effects of wetlands on the water quality of the Marzenego River – even developing their own method for measuring levels of bacteria! In Romania, led by GeoEcoMar, citizen scientists investigated the effects of Danube wetland restoration on water quality and bank stability. In Hungary, led by Geonardo, the project surveyed water quality and biodiversity to promote the restoration of Creek Rákos (you may have seen us on the local news). In the UK, the RRC worked with two established citizen-science projects – Outfall Safari and Riverfly – to evaluate their impacts.

The second way we developed tools for impact assessment was to create a standardised approach, consisting of a set of questions that project managers can answer on the MICS platform to assess the impact of their citizen science project, at any stage during the project lifecycle.

So when users come to the platform, they are invited to answer a set of 200 questions about their citizen science project, and from these answers, the impact of the project is scored in 5 key areas: Science; Environment; Economy; Governance; Society.

Can you talk about how these questions were developed, and how the scoring is conducted?

All the questions have been developed from the literature. If you go to https://about.mics.tools/questions you will find every question that forms the MICS platform impact assessment, complete with answer options as well as help and information text.

We have also included the source(s) of each question, and how it was originally worded. What we have done with MICS is make the questions themselves more accessible and provide a comprehensive set of answers that users can select from, something that was previously missing.

We’ve tested these questions on project coordinators across multiple types of citizen science projects, to make sure that they are understandable, and to make sure that they can answer the question with the options we provide.

We are constantly tweaking and improving the platform, so if a user emails us to say that they would have liked to answer with something different, we are happy to add options to the platform.

Another output the quiz produces is a list of recommendations and resources to help the user learn more about areas in their project that may have scored less well.

How were the recommendations created? Were they taken from the MICS pilot studies, or developed with a dedicated advisory board?

As with the questions and answers, the recommendations are based on the literature on what makes citizen science impactful, and they have been validated through interviews with project coordinators. You can see which questions and scores relate to each recommendation at https://about.mics.tools/indicators – we want to be completely transparent about where all the information MICS provides comes from.

How do you hope users will use the MICS assessment tools, and how do you foresee this helping new or continued citizen science projects?

There are so many ways you can use the MICS tools. When planning a citizen science project, users can read the online guidance on how to co-design for impact. When a project starts, they might use the standardised impact assessment tool to predict the impact that their project might have; or read the recommendations on how to improve that impact. At the end of the project, they might use the assessment to write reports on what impacts the project had. A couple of projects – Cos4Cloud and MONOCLE – have already used their MICS impact reports to help write sections of project deliverables on evaluating project impacts. You can read more about how they used MICS here.

Many of your pilot studies focused on nature-based citizen science initiatives. How did you ensure that these tools were transferable to citizen science initiatives in other fields of science and research?

By ensuring that our literature review on impact assessment covered a wide array of citizen science projects, and by interviewing project coordinators from different fields, we were able to make the MICS tools applicable to any type of citizen science project. Although MICS focuses on nature-based solutions, and its coordinator, Earthwatch Europe, is an environmental charity, we wanted to assess the impact of citizen science projects on more than just the environment. That is why MICS considers other domains too, so there should be something relevant for everyone.

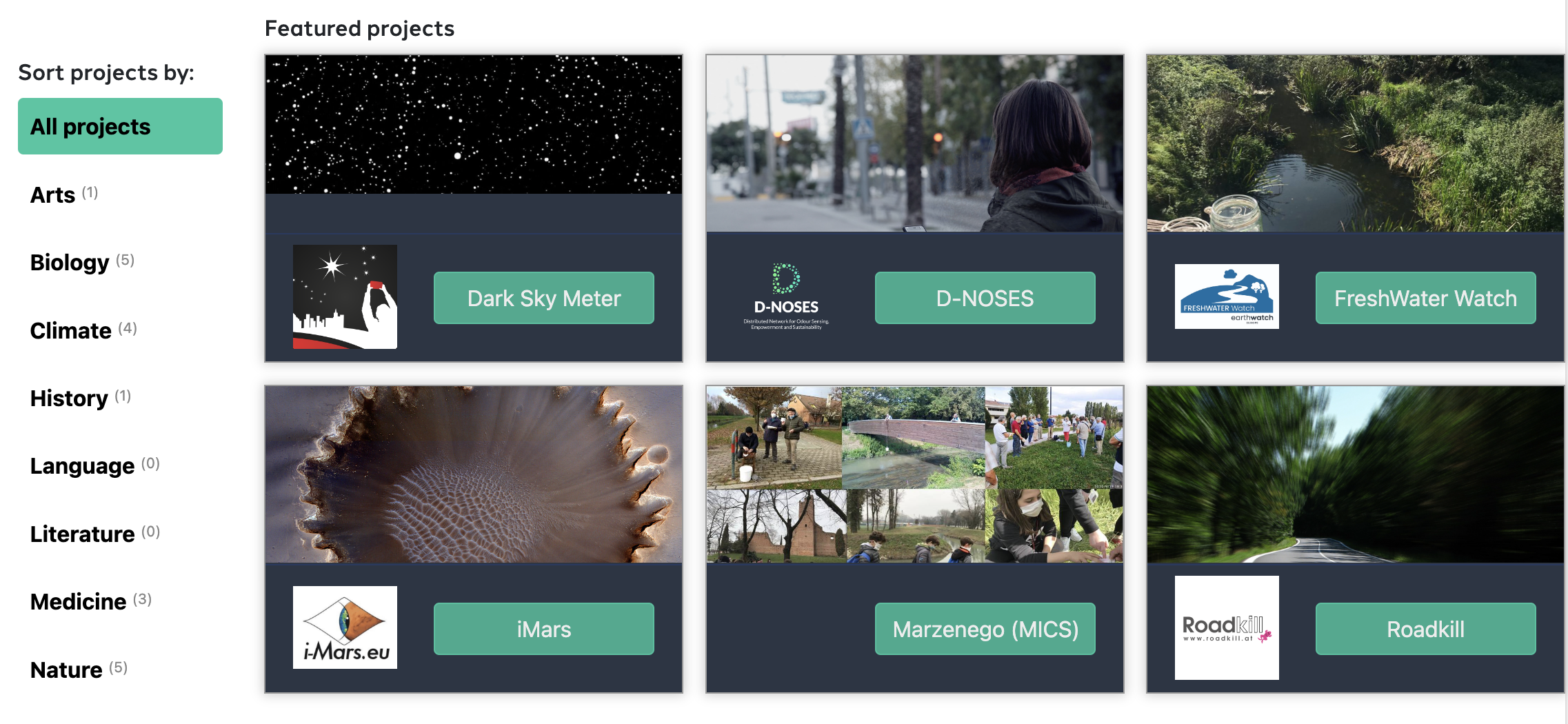

Another major part of the platform is the project catalog, which showcases citizen science projects and their scores. Can you talk a little about why it was important to include these profiles on the platform?

Essentially it facilitates the exchange of ideas and best practices. We want projects with a high impact to be able to show this off and be proud of it. Equally, we want project coordinators with less experience in creating or measuring impact to be able to find examples of projects that have done this well. Each month we also select a #MICSProjectOfTheMonth to showcase on Twitter. It’s a way of thanking our users for taking the time to use the platform and highlighting other projects that we think are examples of impactful citizen science projects. Follow us to learn more about our user projects.

What is in store for the future of the MICS platform?

We are always looking to improve the platform; if anyone has any comments, questions, or feedback, we absolutely welcome it. Please email me at swoods@earthwatch.org.uk